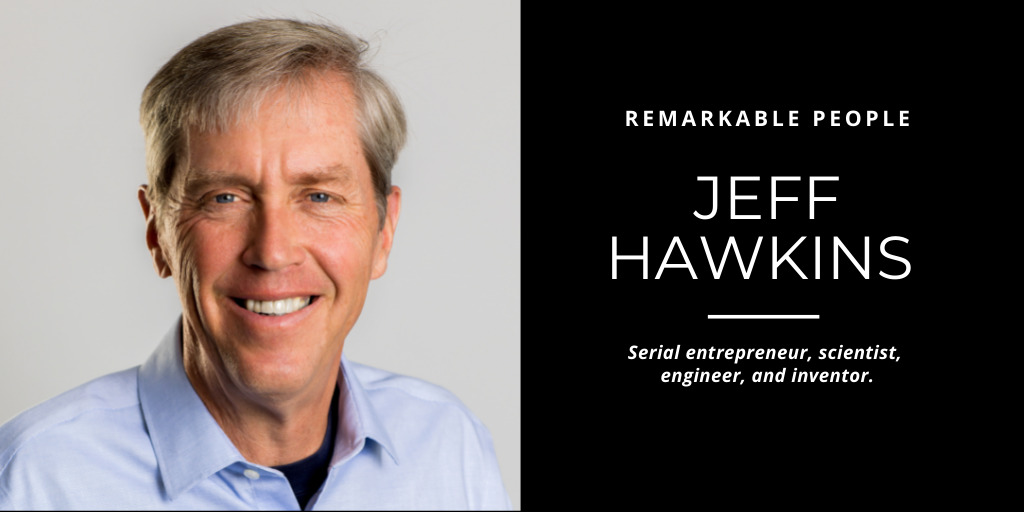

Jeff Hawkins founded two mobile computer companies: Palm and Handspring. If you’re not too young, you may have heard of their products: the PalmPilot and the Treo smartphone.

Anton Chiang from Cupertino, CA, USA, CC BY 2.0, via Wikimedia Commons

More recently, Jeff founded the Redwood Neuroscience Institute, a scientific organization focused on understanding the neocortex. His latest company is Numenta. It is investigating brain theory and artificial intelligence.

He has written two books. His latest is called A Thousand Brains: A New Theory of Intelligence. In this book and in our interview, Jeff covers topics such as:

🧠 How the brain makes sense and how it is deceived

🧠 A different model for the brain–as opposed to a fast computer

🧠 Why AI isn’t the big threat to mankind–yet anyway

I also learned why I look at my Yeti coffee cup completely differently after reading his book.

Jeff has a B.S. in Electrical Engineering from Cornell University. He was elected to the National Academy of Engineering in 2003.


If you enjoyed this episode of the Remarkable People podcast, please head over to Apple Podcasts, leave a rating, write a review, and subscribe. Thank you!

Join me for the Behind the Podcast show sponsored by my friends at Restream at 10 am PT. Make sure to hit “set reminder.” 🔔

Text me at 1-831-609-0628 or click here to join my extended “ohana” (Hawaiian for family). The goal is to foster interaction about the things that are important to me and are hopefully important to you too! I’ll be sending you texts for new podcasts, live streams, and other exclusive ohana content.

Please do me a favor and share this episode by texting or emailing it to a few people. I’m trying to grow my podcast and this will help more people find it.


I'm Guy Kawasaki, and this is Remarkable People. Our remarkable guest is Jeff Hawkins. Jeff founded two mobile computer companies: Palm and Handspring. If you're not too young, you may have heard of their products: the PalmPilot and the Treo smartphone.

More recently, Jeff founded the Redwood Neuroscience Institute, a scientific organization focused on understanding the neocortex. His latest company is Numenta. It is investigating brain theory and artificial intelligence. He has written two books. His latest is called A Thousand Brains: A New Theory of Intelligence.

In this book and in our interview, Jeff covers topics such as how the brain makes sense and how it is deceived; a different model for the brain as opposed to a fast computer; and why AI isn't a big threat to mankind--yet anyway.

I also learned why I look at my Yeti cup completely differently after reading his book.

Jeff has a BS in electrical engineering from Cornell University. He was elected to the National Academy of Engineering in 2003. I'm Guy Kawasaki, and this is Remarkable People. And now, here's the remarkable Jeff Hawkins.

Jeff Hawkins: Just a little tour of the brain. So we've all seen pictures of the brain. We can--or images of the brain--and if you look at a human brain, mostly what you see is this little wrinkly stuff around the outside.

The largest part of the human brain is the neocortex, and that's what it is. And it's a sheet of cells. It's about the size of a dinner napkin and about twice as thick. It's only about two and a half millimeters thick, and it wraps around the rest of the brain. So that neocortex is the organ of intelligence. It's the part that we see with, hear with, use language with, and think with. My neocortex is speaking and yours is listening right now.

Inside of that, there's a whole bunch of other smaller brain structures. These include everything starting actually from the spinal cord to the brain stem, and there's lots of these other little pieces that do various things. Sometimes these other parts of the brain are referred to as the older brain, or, as I say in the book, the "old brain," because some of them are evolutionarily older.

So spinal cords are older than the neocortex. Simple animals have a spinal cord, and these other parts of the brain control our basic bodily functions: breathing, heart rate, eating, sex, emotions, even basic behaviors. But mammals have a neocortex, and in humans our neocortex is really big. That's why it occupies so much of our skull, and seventy percent of the volume of our brain.

And that's why we're intelligent, because we have this big… and that's the part of the brain that we study; we want to understand exactly, in detail, what it's doing and how it does it. And that's in some sense the greatest scientific challenge of all time.

Guy Kawasaki: I understand now “old brain” versus “new brain,” what about left brain versus right brain? Or is that just something that is a social convention?

Jeff Hawkins: There are differences between the left brain and the right brain. So there's no question about that, but I think it's well overblown. We don't really make that distinction. I should go back to the “old brain, new brain” thing because I didn't finish the picture.

When you're thinking, that's the neocortex-- it’s doing the thinking. But the neocortex itself does not control any of your body. It cannot directly control any muscles, and so when the neocortex wants to do something, it sends signals to the other parts of the brain that actually can control muscles and says, "Would you please do this?"

A very simple example would be breathing. Normally you breathe without any thought. You breathe during your sleep. It's not something you have to think about, but your neocortex can say, "Well, I want to hold my breath right now,” or “take a deep breath right now."

The neocortex could say, "Please do this," but if the old brain says, "I've had enough of this. We need some oxygen," it takes over again. So part of the theme of my book, and actually how the brain works, is to realize that the neocortex is not really in control all the time.

There's a battle between these two: between our emotional states, some of which are primitive needs, and the more rational thinking that we do. And the left and right side stuff is not something we pay a lot of attention to in our work. Most everything we do happens on both sides of the brain.

So vision occurs in both sides of the brain, and so does touch. Language is one of the very few things that really occurs on one side of the brain--it's on the left side--and there are some theories why that's true, but it doesn't really change the way we think about the neocortex as a whole.

Guy Kawasaki: And when people say the reptilian brain, what are they referring to?

Jeff Hawkins: It's a bit of a misnomer, and so that phrase has become sort of “looked down upon” these days. But what they're referring to is the--if you look at a reptile and you say, "Okay what does the reptile brain look like?” It kind of looks like a human brain but it doesn't have a proper neocortex.

It has a lot of the others--we share a lot of other things, and so the argument was that if you take away the neocortex, what you're left with is the reptilian brain. And the reptile, it mates, it has children, it hunts for food, it knows where it is, it can solve problems.

So we are like that too. The idea of the reptilian brain is that we have these more primitive behaviors, and this is true, we do, but on top of that we have this newer structure, the neocortex. The term "reptilian brain" has fallen out of favor now, so people don't like to say that. It’s a little non-PC or something, for some reason.

Guy Kawasaki: Now that we got the basics, what is, what is your current working model for the brain? It's not the computer IO device, right? It's--it's not the neural network. What is it in your eyes?

Jeff Hawkins: We're mostly focused on this one part of the brain which is seventy percent of the human brain and it's the part that makes us smart, so we're just going to talk about that--and the, the highest way you can think about it is ‘it's not a computer.’ It's tempting to think it's a computer.

You might say, "I get these inputs from my eyes and my skin and then I process them and then I do something," but that's not the right way to think about it. The neocortex is a modeling system--It builds a model of the world. It recreates the world inside of your head. And we use that model for thinking; we use that model for basing our actions.

So if your listeners are listening to this podcast right now, some of them may not be doing anything else. They’re maybe just sitting there chilling, listening to this podcast. And what are they doing? They're doing something, but they're not acting, right? Are they computing something? Not really. What they're doing is they're updating their model of the world.

So right now they may have learned some new things. They may have learned about the neocortex or why we don't use the term ‘reptilian brain’ or things like that. So what we're constantly doing, when we're living our life, is building this very rich model of the world--we can talk about that--inside of your head.

Everything you know is in this model of the world. And then later we can use the model to say, "Oh, where am I?" or "What's going to happen?" or "What's likely going to happen?" or "If I want to accomplish a certain task, how would I go about doing that?" And we do this using the model in our head.

We think about it and then we can act based on that, but we don't have to act. We can just think through these scenarios in our head. So that's what's going on--it's a model-building system, and what we've discovered is how the actual neurons in your head--the cells in your head--do this, and it was very surprising.

It wasn't like we anticipated, but we now have a pretty good idea of what's going on in your head when you're thinking and when you're acting and when you're learning and when you're seeing the world.

Guy Kawasaki: So if I got this right, the gist of your book is that the brain contains many models of objects.

Jeff Hawkins: It's many models of everything. So I just said the neocortex, again, I'm going to be specific, Guy, it’s the neocortex that contains this model of the world, and that idea itself is not terribly controversial. A lot of people understand that, but what we discovered was it's not a single model.

It's composed of tens of thousands--actually over a hundred thousand--modeling systems. It's like you have 150,000 brains in your head. 150,000 little modeling systems in your head. It's not one monolithic model. It's lots of these little models that are complementary to each other.

There are some models that relate to vision and other models relate to sound and other models relate to touch. And we don't perceive the world this way. We have a singular perception of the world as if, “oh, I'm one thing. I'm one place.” I don't feel like, “oh, I'm a-a hundred thousand things,” But that's the way it's structured.

And we can explain how those individual models work and how they work together to give you this singular perception. One of the big things that came out of our discoveries is that there are -that basically- there are many, many thousands of models of everything you know.

If I ask myself, "Where do I store knowledge about the coffee cup?" which is an example I use in the book, it's not in one place in your brain. We don't have one model of coffee cups, we have many thousands of models of the coffee cup. There are some of what the coffee cup feels like; there are some of what the coffee cup looks like; there are some that relate to the sounds a coffee cup makes when you put it on things. And these models all get united together into a single percept, but they work independently in some sense.

Guy Kawasaki: I have to say that having read your book, I just cannot look at my Yeti coffee cup the same anymore.

Jeff Hawkins: I know.

Guy Kawasaki: I used to just think it's a cool coffee cup and now I'm thinking, “I wonder which model I'm processing now.”

Jeff Hawkins: It's so funny. You used to look at the world an-and you see things like a coffee cup or a chair or a bicycle and you say, "Well, I see what it is,” but that's not really true. You have this ‘model’ of this thing in your head and what you're doing is--you have this very impoverished input stream coming from your eyes and your skin--it's not like you think it is. It's very impoverished. It's very little information coming into the brain and yet what you perceive is the model itself.

You think you're looking directly at the coffee cup--and you are in some sense – but what you perceive: the color, the shape, all these things, these are actually part of the model in your head. For example, colors don't exist in the world. There is no such thing as color in the world.

There's frequency of light, which is not the same, and what's going into your head are these little spikes on the neurons, and they don't really say anything about color; they're just spikes, and yet we perceive color. It's a creation of the brain. It's not a reality.

It's a reflection of reality, but that's actually the way we perceive the world--so looking at your coffee cup, your perception of the coffee cup is all inside of your head. It's a fabrication of the model you have of coffee cups.

Guy Kawasaki: So as an aside, two things: One, it seems like that 150,000 number is a low number, and also, why would you call your book A Thousand Brains if there's 150,000 brains?

Jeff Hawkins: Oh, okay. You're the first person to ask me that question and I really worried that people would ask me that question a lot. Well, first of all, I don't know how you think 150,000 is a small number. It clearly--there are billions of neurons in your brain, billions of cells, but each of these modeling systems is very complex. They’re purely complex systems.

So 150,000 complex systems is not that few, especially since most people would think we'd have only one. You think you'd have one model of the world, not 150,000 of them, so from that point of view it's a big number.

Yes, technically the theory should be called “the 150,000 brain models of the world,” but you're a marketing guy, right? That doesn't come up--that doesn't come off your tongue very well, does it?

So the excuse I have for that is you have 150,000 modeling systems in your head. If I want to think about a coffee cup, they don't all have models of a coffee cup--only a small subset of those have modeled a coffee cup, but there'll be thousands of those. We call them cortical columns; that's the term in neuroscience: the cortical column. It'd be thousands of them that would be actually modeling the coffee cup.

So I could argue the thousand-brains theory could refer to the number of models you have of a particular thing and it'd be thousands of those models.

Guy Kawasaki: Or you could just say, “It's a better title.”

Jeff Hawkins: Yeah, it’s a really important discovery that can really impact the world and we needed to come up with some way of referring to it, and as scientists you're not really encouraged to do that. You're supposed to be very dry and your papers are really hard to read and that's the way it works. But we realized that we needed a way to capture this idea, something that would refer to it, and so the Thousand Brains Theory of Intelligence is what we came up with.

Guy Kawasaki: Let's say that you and Malcolm Gladwell are having a beer.

Jeff Hawkins: Okay.

Guy Kawasaki: Could one make a case that, for an expert in Malcolm Gladwell's perspective who can make a correct decision in the blink of an eye, Jeff Hawkins would say, "That expert has more--or better--neocortical columns than anybody else"?

Jeff Hawkins: I wouldn't say the design is better. They're more- They’re trained differently. What makes an expert is you spent a lot of time on a particular domain or topic. And you've built up a model, even of a topic like marketing or a topic like democracy or anything like that. You have models of these things in your head and you have built a sophisticated model, in which case you can immediately recognize something and say, "I know what that is. I've seen things like this. I understand this field very well and I can very quickly make a decision."

It's not that my columns are better, or my neocortex is better, it's just that I've spent more time studying that topic and I've spent more time gaining knowledge and building a more sophisticated model of it.

It's a little bit like mathematicians. If you're a mathematician, you spend your days looking at numbers and equations and so on. You can look at an equation and it's like an old friend. It's like, “Oh yeah, I know that person. I know how they behave. I know we did this together, and we did that together,” right?

And you immediately have these--in the same way you might say, “Oh, that's--that's my friend from high school. We did all these things together.” But if you're not a mathematician, then it could be like Greek. It can be like, “What the hell? I don't know what's going on here.” And then you have to think about it a lot more.

So these sort of very quick intuitions are not coming from nowhere. They're coming from the fact that you've studied something in the world and you have a model of it and that model is rich and it gives you an answer right away. It says, "Okay, yeah, we know what this is,” and I know how it behaves and therefore I can give you a quick answer.

If you haven't studied that, then you go, “Well, I don't understand this,” and I look at the list of facts and try to figure out what's going on.

Guy Kawasaki: How does a scientist prove something like the neocortex is generally the same material and what matters is where it's connected not what it's made of? How do you prove something like that or investigate something like that?

Jeff Hawkins: Sure, so that hypothesis you just mentioned was one that I talk about in the book that came from a neurophysiologist named Vernon Mountcastle. And so he speculated this--to tell you what that is--that when you look at the neocortex, he said, “Look, the neocortex is going to be working on the same principle everywhere,” right? And somehow the parts of the neocortex that do language, vision, and touch are all doing the same basic thing. So that was the thing you just mentioned, and we can ask, "How did he prove that?"

To be totally nerdy here, you can't prove anything. You can only disprove things, but you can build evidence to support things. And if you get enough evidence to support something, then people say, “Yeah, okay, it's probably true." So, in this case, this particular example you mentioned, which was proposed by Vernon Mountcastle in 1979, there was a lot of pushback on it.

A lot of people didn't believe this was true, or they didn't think it would be likely to be true. So, he spent a good portion of his life trying to build evidence for it. How did he do that? Well, he was a neurophysiologist so he did a lot of it by actually studying brains and collecting data from the brains--and we can go into the details if you want to--and publishing papers saying, "See, it looks like I said it was." And then other people would test it too.

And just to give you an example, there's a famous experiment done by a guy named--I'm going to mispronounce it here. His last name is Suret--where Mountcastle literally said, “Hey, if I take a part of the neocortex that’s connected to eyes and sees, if I rewire it and connect it to the ears, it should hear,” and that's part of--it's a process.

So this guy Suret did this experiment. They took these ferrets when they were embryos, then they rerouted the circuitry in the cortex, and sure enough the ferrets ended up seeing with the parts of the brain that were supposed to hear and hearing with the parts of the brain that were supposed to see. So that's just one more piece of evidence, but mostly you collect all this data over the years, and you make your case that this data supports your hypothesis. That's the scientific method, and it works pretty well. It can take a long time sometimes, but it works pretty well.

Guy Kawasaki: Did this kind of work lead you to a belief in non-theism? Is that how you arrived at this?

Jeff Hawkins: First of all, if you’re asking if I'm a non-theist, the answer is I am a non-theist. I grew up in a non-religious family. It wasn't that we were atheist per se, it's just- we didn't go to church. We didn't have a religion, you know? I didn't even know it was something that was missing in my life.

It wasn't like, “Oh, let's be different.” It was like, “Oh, that's my family.” It’s just--that's what we did and so I have been what you might call a “free thinker” or a “rational person.” My whole life I've always felt like I believe there's a rational explanation for everything that goes on in the universe.

And so that pre-dated any of my interests or work in--in neuroscience. I didn't--I didn't come to be a non-theist by studying the brain, I just never was a theist to start--just never was, yeah.

Guy Kawasaki: I-I don't think the existence of God is provable, but that doesn't mean he or she does not exist, right?

Jeff Hawkins: Of course, right, you--you, but there's a lot of evidence that suggests that there isn't a God. Here's the thing, I’ll tell the story briefly. I tell the story at one point--in the end part of the book--I told a story about an experience I had in grade school, and I think it was, like, second grade or something like this. We were really young.

I was probably like, you know, seven or eight years old. And--and these kids were--we were all in the playground at school. This was in Greenlawn, New York, and for whatever reason the kids were going around talking about their religions.

And they all talk-- and it was like, it was a fun conversation. There was no confrontation going on, and it was like, "Oh yeah, well, we're Hindu and this is what we believe." "We're Pentecostal Christians and this is what we believe." Someone says, "We're Jewish and this is what we believe in." And so they're going around and trying to figure out what the differences are between their religions.

And also, this is fascinating because I didn't know most of this. I hadn't been exposed to this. And then they came up to me and they said, "Jeff, what's your religion?" I said, "Well, I don't think I have one." And they said, "That's not possible." And someone said, "You have to believe in something. What do you believe in?"

I'm like, “Oh my God,” you know, “I'm on the spot here.” But what I realized at that moment, very clearly--if I hadn't realized it before--was that these people believed different things and it couldn't all be right. That's not possible. If something's A or B, it can't be both, that's at least in my opinion. It seemed obvious to me.

And I said, they're all different. They all believe different things. Isn't this bothersome to them? Don't they want to find out who's right? Which one is the right one? And it just struck me as, well, then, almost certainly, they're all wrong, because why would just one religion be the right one and the other ones incorrect? Well, that doesn't make any sense to me. And they weren't questioning it. So that's an attitude I've had my whole life.

It’s like, “Well, yeah, there could be some proof to religion, but I haven't seen it.” And “Why are there different ones?” And some people believe in different gods, and sometimes people believe in multiple gods, just as much as people now believe in monotheism. So I feel like there's too much noise in that space.

And--and it's probably, at least, they can't all be right so I'll say all of them have got to be wrong. I didn't know if there was going to be a conversation about religion.

Guy Kawasaki: You didn't know I was going to ask you why your title is only 1,000 either.

Jeff Hawkins: How would you describe your religious belief? What would you call yourself?

Guy Kawasaki: I think, having worked for Apple, I have learned that some things need to be believed to be seen and that includes Apple's success and God. How's that?

Jeff Hawkins: But would you subscribe yourself in a particular religion? Would you or are you an independent believer?

Guy Kawasaki: That's a really complex question because until about four years ago, I would have said, "Yeah, I'm a Christian," but now when you say you're a Christian, that parses to very different things than it did four or five years ago.

Jeff Hawkins: Oh, interesting.

Guy Kawasaki: I used to tell people I'm an evangelist, but a lot of people don't make the differentiation between an evangelist and an evangelical, and believe me, that is, you know, a very dangerous…

Jeff Hawkins: Didn't you- did you coin the term “evangelists?” Wasn't – aren’t you the first?

Guy Kawasaki: Well, I mean, there was Jesus before me but it really was Mike Murray.

Jeff Hawkins: No, no. As a corporate title.

Guy Kawasaki: That was Mike Murray and the Macintosh Division.

Jeff Hawkins: Oh, okay. All right.

Guy Kawasaki: Between Jesus and Mike Murray, there was a 2,000 year gap, but anyway.

Jeff Hawkins: Yeah.

Guy Kawasaki: Do you think that the function of the old brain can explain things like war and hate and poverty?

Jeff Hawkins: To some extent, I-I think that's right, but let's back it up a little further than that. I don't know if you believe this or not, but, I believe in evolution and that has occurred and humans have evolved to get here. And, there's a famous book that I mentioned in my book, called The Selfish Gene by Richard Dawkins, where he describes that evolution really is all about individual genes.

It's not about species, and so evolution is there to help genes replicate, and we as a species, and we as individuals, are really evolved to support the replication of genes. So now replication of genes--we can think of it like having children, but it's a bit more subtle than that. The only thing that matters is success, and success in replication.

It doesn't have to be pretty. It doesn't have to be nice. It doesn't have to be anything else. So there are things that are in our background or that we all have, or some of us have, that we would consider really bad traits, but are successful from an evolutionary point of view-- from the genetic point of view.

So you think about something like genocide, which is a terrible thing. But in some situations it can benefit the genes, or rape can benefit genes. And so many of the things we look down upon as a society, as a culture and humanity, have an evolutionary background to them. At one point in time they were--or in some places in the world continue to be--successful strategies.

Now so are nurturing and friendliness and being nice to people. Those are advantages too. So what we see is this mix of things that we like and things we don't like, but they're all successful strategies. Now this gets reflected not just in the genes but of course in how we behave.

So your question about the old brain is in some sense, yes, the drive to have sex is an old brain thing. Right. And sometimes it's nice and sometimes it can be pretty bad and nasty. The drive to- to accumulate status in a society. Well, we can do that in good ways, we can do it in bad ways, but those are strategies that have helped genes in the past.

And we all have these to some degree or another in our brain structures and our genetic background. And like I said earlier, we can vary in how our new brain and our neocortex can control and make us behave well. We all know the right thing to do, or at least most of us do, but sometimes people don't really do the right thing.

So how much can our rational thinking sort of keep the primitive behaviors in check is an interesting question and clearly we don't do it well enough.

Guy Kawasaki: So can you tell me what is truly an intelligent machine and how will we recognize it?

Jeff Hawkins: So you can think about today's AI as it's all been driven by a certain sort of paradigm, and the paradigm is: take a task that humans can do and try to get a machine to do it. And if the machine can do it as well as a human, that's good. If it can do it better than human, that's even better, and then that machine is at least on its way to being intelligent.

Even if we don't think it's intelligent yet, it's on its way to being intelligent. In the parlance of AI and computers, we call those “benchmarks,” you know? There's all these different benchmarks, “Oh, who can do the best recognizing these images?” “Who can do the best understanding of these language phrases,” and things like that.

But that's not what intelligence is about. And so most AI--I’ll admit today's machines really aren't intelligent--but I make the argument in the book that intelligence can be determined by working on a set of principles. That if a machine works on a set of principles, regardless of what it does--regardless of whether it does something a human can't do, or it does it better than a human or not--if it’s working under those principles, then we would say it's intelligent.

And part of what I did in this book--the whole second section is part of this--is laying out what those principles are. Like, if we build a machine that has- that works under these principles, it will be intelligent. It's very analogous to computers.

We have something in computing called the “Universal Turing Machine,” which is essentially this: any computer that has memory and a CPU and an instruction set and some software is capable of computing anything, and it's called a Universal Turing Machine.

And so even the teeny little computer in my coffeemaker is an example of a Universal Turing Machine. And so is this big machine that's in some cloud someplace, but they all have the same principles.

They all work on the same principles. And so we don't question whether my coffee pot has a computer in it just because it doesn't do too many things. We have to get to the same way of thinking about intelligent machines. Intelligent machines are going to be built on a set of principles which I outline.

And if they have those principles, we'll say it's intelligent. It may not be as intelligent as a human. It may not even do anything like a human, but I think a mouse is intelligent. It's not, like, human-like intelligence, but it works on the same principles that you and I work on.

Guy Kawasaki: A computer mouse or an analog?

Jeff Hawkins: Oh, I'm sorry. A biological mouse.

Guy Kawasaki: Okay.

Jeff Hawkins: Sorry. A biological mouse.

Guy Kawasaki: Just making sure.

Jeff Hawkins: A rat. Rodent. Yes, yeah. You got to get- You got to get out of that computer world, Guy. It's funny. It hadn't occurred to me to think of a computer mouse.

Guy Kawasaki: Well, Douglas Engelbart.

Jeff Hawkins: We have examples of all kinds of animals that are intelligent, and they all have got to work on the same principles, but they don't have to be as smart as, or do the same things as, a human does. So when we talk about intelligent machines, they have to work on a set of principles. Until very recently, no one knew what those principles are, and I'm arguing that we figured them out. And that's why I wrote the book, and that's why the second section on AI talks about them in depth.

Guy Kawasaki: So it's not that big a deal that Big Blue can beat a chess master or Go master?

Jeff Hawkins: Well, it's a big deal if you’ve spent two years of your life trying to make it do that, then it would feel pretty good.

Guy Kawasaki: That's not the test for a big deal, but okay.

Jeff Hawkins: I guess it was Big Blue beat the chess master and Watson beat the Jeopardy game, right? And then the AlphaGo beat the Go players, right? So these are three events that were heralded as landmark events in AI. And I think they're not, they were great marketing events.

They showed some technical prowess. They did things that were clever and entertaining, but did they mark a threshold where all of a sudden they said, "Oh my God, we started making intelligent machines and now it's all downhill from here?" No, not at all.

None of those advances really are intelligent in any way that you and I would think about them. And they really were point solutions to point problems. They weren't like, “Oh, we made a machine that is smart and can play Go.” No, we made a machine that can play Go.

That's what we made--a machine that could play Go, and we made a machine that can play chess, okay? We used to marvel--I have a calculator... You can buy a calculator for $10 that does mathematics a million times faster than you and I and at one point that seemed miraculous.

Now we’re just like, “Oh yeah, it's just a calculator, right?” It's like no big deal. We actually-- we shouldn't be amazed that we can build a machine that plays chess better than a human because maybe we should say, "Why did it take us so long?" You know? But- but it's not, but those machines are not intelligent.

Today no machine exists that works on the principles that I think are required to be intelligent. There's – they’re not even close. We can do that in this century. We can do that in a couple of decades, but we're not there yet.

Guy Kawasaki: So as it currently stands, AI is not nearly as scary as Elon Musk says it is.

Jeff Hawkins: I make that argument about-- yeah, it's not just Elon. It's-- there's a bunch of people who have argued that AI is what they call an existential threat, meaning it could threaten the existence of humanity. I don't think that's right at all. And I don't just state it as you know, “I don't believe you.” I take it apart and I look at the arguments and explain why it's not an existential threat.

And we've already touched on it briefly. What people assume is that when we create really intelligent machines, that they're going to be like humans, and they assume they're going to have the same desires as humans or the same motivations. Or they'll feel the same sort of emotions. Like, you know, they won't want to be--they won’t like being, you know, servants to us and they'll want to be free of us, or they'll- they'll develop their own motivations about what's important in the world and so on.

But the part of our brain-- the neocortex-- it's really the intelligent part. It doesn't have those drives and emotions. It's emotionless in some sense. It's affected by our emotions, but it doesn't generate them. And so you can be intelligent without having human-like drives and emotions and they're not going to just appear automatically.

It's not like if you make something smart, it's going to all of a sudden say, "Whoa, I woke up and now I realize I'm enslaved and I'm not going to like this anymore." I make the analogy in the book of a map of the world. And so the model in your head--in your neocortex--is kind of analogous to, or a metaphor for, a map of the world.

And the map of the world contains knowledge about the world and where things are, and you can use that map to do bad things, like, you could use it to say, “Oh, I'm going to now go and use this map to wage war or slay my enemy,” or you could use that map to do good things. You can do trade and distribute goods around the world.

But the map itself doesn't have those drives and emotions. So when we build intelligent machines, the intelligent part is- is not going to have these drives and emotions. It's just going to be intelligent. It's going to have this model of the world and how we apply it is up to us. That is, we could build machines that are used for bad things--intelligent machines that are used for bad things.

And we could build them to do good things. And I suppose it's even possible that we could build machines that have their own motivations and drives. That would be like recreating the other parts of the brain, but that's really hard to do.

And no one's doing that. We're not even talking about that. So I just want to make sure people understand that intelligence itself doesn't require that these things that people fear exist. It's another step beyond that that we have to be concerned about.

Guy Kawasaki: And what would you say the ramifications are of your concept of this 150,000 brains on artificial intelligence?

Jeff Hawkins: This has been a great debate in AI for, well, a debate in AI, for a long, long time, which is: okay, if we want to build intelligent machines, and a lot of people do, what's the best way of going about it? And specifically, do we have to pay attention to how brains work? Or can we just ignore how brains work and we'll figure it out on our own? The vast majority of AI research that has been conducted over the last sixty years essentially said we can ignore the brain--It doesn't matter.

When I was a--I mentioned this briefly at the beginning of the book--I was applying to be a graduate student at MIT in their AI lab. And I--I said, “I want to study the brain and use those principles to build intelligent machines.”

And I was told by the professors I met with that the brain is just a messy computer. There's no point in studying it. That's a stupid idea. And I just didn't believe them. I said, “No, the brain is…” How are we going to figure out what--the only working example we have is the brain, so why don't we say, “Where's our hubris coming from?”

We think we don't have to pay attention to the one example of something that's intelligent--We don't have to look at that. And then the other argument would be like, “Well, it's too complicated and we can't figure out how the brain works,” which is true--It's very complicated. But I always felt that the quickest way to building AI would be to first figure out how the brain works.

Even if that took forty years, I was going to be the quickest one. And so I never gave up on that. And so now we figured out a lot of these things about how the neocortex works – we have made huge progress on this. So I now can say, “Okay, these are the principles,” but now with this we have a roadmap. Now I can say, “Okay, today's AI is not intelligent, but how could we get there from here?”

What would we have to do? What new things do we have to implement to get there? And again, I lay this out in the book. This is also the work my company is doing now, Numenta, we are literally implementing this roadmap. We say, “Okay, we have to add this component and this component and this component and this component to get there.”

So it gives us a blueprint for how to go about building intelligent machines that we didn't have before. And we'll have to see how many AI researchers I can convince of this. But today there are a lot of senior AI scientists who are kind of saying, "We're kind of stuck here. We need some new ideas," so at least I'm going to offer some.

Guy Kawasaki: So if you are successful, will it ironically lead to these fears of Elon Musk and others that AI can turn on us?

Jeff Hawkins: No, it won’t. For the same reason I mentioned earlier, they won't turn on us. It-it's just not going to happen that – you know, the same fears existed in a different time about computers or even about the steam engine if you go back to the original worries about these machines were going to take over humanity or we all lose our jobs or the computers would be outsmarting us, whatever.

You don't think about that anymore. We don't sit around going, “Oh my God, these computers are going to wake up one day,” and in--my desktop--laptop computer is going to one day say, "No, I'm not going to do what you want. I'm going to go. I'm going to do a different interview."

Guy Kawasaki: Well, you- you and I use Macs. Maybe Windows users have that problem.

Jeff Hawkins: Maybe that's right. It may happen. Who knows? So- so it's funny.

I think this century--the 21st century--will be pretty much like the 20th century. In the 20th century we had pioneers: Alan Turing and John von Neumann and others. In the 1930s and early 40s, they really kind of defined what computing was. They took it from non-existent to a theoretical basis.

And then for the next sixty years, we figured out how to build those things. And we built them on the architectures that- that Turing and von Neumann laid down back in the 40s. And it really transformed our society and it disrupted huge numbers of things, but it didn't present any existential threat to humanity.

And we'd all agree, like, “These are great inventions, and now we can come--we can use our computers to create vaccines for the- for viruses,” and things like that. The same thing's going to happen in this century. We are right now sort of at that period of time where Turing and von Neumann were, just discovering these principles of intelligence.

We're just discovering what are the ingredients that make an intelligent system. And, over the coming decades, we're going to build machines that are really powerful and that will transform society in the same way computers did. But they're not a threat, just in the same way computers weren't a threat.

And I know people don't believe this, but I ask you if you're listening to this, go read the book and listen to my arguments about this because I'm very confident that these systems will not be threatening any more than a computer. I could put a computer into a missile-- a self-guided missile--and that's a dangerous thing.

But we still build computers. And so we can use intelligent machines to do bad things too, but they are not going to be an existential threat. They aren't going to one day wake up and say, "Aha! I'm free. Go away, you human."

Guy Kawasaki: The irony, if you think about it is, the true threat to humanity that we're facing right now is a virus, and the bigger picture is climate change. Neither of those require particular intelligence.

Jeff Hawkins: You can argue the two--and I kind of make this argument in the book--the true threat to our long-term survival is our own intelligence, not from the virus point of view, but certainly from climate change.

Climate change is coming about because we have been so damn successful at replicating ourselves; reproducing and figuring out how to extract energy from the world and figuring out how to improve our lives.

So our intelligence led to this climate change crisis. It's indirectly, but it still led to it. We have other threats--nuclear weapons are still a threat. A very real threat, and those are also human created.

So our intelligence in some sense is not only – it creates threats for our long-term survival, but it also gives us the opportunity to deal with them. Of course, someone could create a virus. Now we have this CRISPR technology, people can edit genes very easily.

And so in theory someone could actually--humans could create really bad viruses and distribute them in the world, so that's another threat we have to deal with.

Guy Kawasaki: With your new model all that you know, like, many people may be listening to this and say, “Well, this is like interesting, fascinating, scientific neuro-biological, columns and neocortex and all. After listening to this podcast, how should I change my behavior? If I'm a student, a CEO, a teacher, a parent, what can I now apply to my life going forward from your research?”

Jeff Hawkins: Alright. So maybe I'll phrase this differently and say, “Well, who cares?” So we figured out how the brain works, right? What difference does it make?

Guy Kawasaki: Okay. Okay.

Jeff Hawkins: All right, let's say that. I was just giving different answers to different people. First of all, I would start--my personal interest, and I know many people share this interest too--is I wanted to understand myself and understand who I am.

I want to understand what's going on in my head when I'm thinking. What's going on there when I see something or I come up with an idea. And so there's a personal satisfaction of knowing how this stuff works and we shouldn't discount that. We are defined by our intelligence in our brain.

And if we want to understand who we are, we should know that. And it's just- just knowing something itself has its own pleasures and joys and rewards and so we shouldn't discount that.

Now obviously if you're an AI researcher, this is big impact--practical impact. If you're a neuroscientist, that’s a big impact. But what if you’re none of those things? You just never – you’re just someone else who does something else for a living. You can start-- there's a couple of things you can do.

Definitely, one: it can help you sort of understand your own biases and your own way of- of underst- why do you understand the world differently? Why do two people-- I talked about this in the book quite a bit--Why do two people starting with the same facts end up with different beliefs?

That's a practical thing to know. We're looking at a very divided country right now-- politically and socially in many ways. And we can understand how it is that two people can look at the same facts and come to different beliefs about them. In fact, I make a pitch at the end of the book in the very last few pages that something we ought to do as a society and as individuals--this is something everyone can act upon.

We should all make sure our children are trained about how the brain works in the same way we teach them how the universe works. So, like, the earth revolves around the sun. We teach them about genes and DNA.

We should teach them this because one of the things you learn--we've talked about this on and off in this conversation--is that we form these beliefs about the world. What we think about the world is based on the model in our head, and we can have different beliefs about the same facts, as we talked about.

Religion is an example of that. But if we understand that this is a mechanism we can understand and that some of the things we believe may be false, even though we believe them, we just can understand that the things I believe may be false because that's built into the system--the system is prone to forming false beliefs.

And then we might just question ourselves a bit. Instead of being polarized we might say, “Okay, well maybe I'm wrong about this. Maybe they're wrong about it,” but we at least have a common ground to say we shouldn't just accept the fact that because my parents told me something that's correct, or that my culture says this, another culture says that, my culture is correct.

Maybe they're both wrong. Maybe one's right. Maybe one's wrong. Who knows? But if everyone understood this from the very early age, like, “Oh yeah, by the way, not everything you perceive in the world is correct and here's examples of how it happens in humans. And if you want to know the science behind it, you can study that science.” I think we'd have a better society.

I walk through multiple examples in the book because when you talk about this, you can alienate people very easily. You jumped right into religion, but if you talk about religion, it really gets people's hackles going and they get all riled up.

I had one--one time someone sent me a series of emails about how wonderful our work was and how he was fascinated by it and how he thought it was the most important work that's being done in science today. And then, like, two weeks later he wrote me an email that says, “I just learned you're not religious. I take it all back. I don't believe any of that stuff.”

So first of all, I just point out that some of these topics are problematic to address.

So in my book, I start off with some very simple things. I say like, well, how can two people, like, someone who has- who loses a limb and believes that the limb is still there, it's called Phantom Limb, and they perceive that their limb is still there. And that's a false belief because, even though they know that the limb is not there, they perceive it's there.

They feel it; they can feel pain on it. So that's- that's a non-controversial example. Then I talked about people who believe the earth is flat. Not many people believe the earth is flat, so I didn't run the risk of alienating too many people. I walk through a series of examples like, how you can take historical facts and hold different beliefs about them.

So the point is you don't want to just jump into these topics that are--that really get people riled up. I, you know, jump in, just talking about vaccines or climate change or something like that or religions. But in the end, we should all know that we're all subject to false beliefs about all these things.

And even if we don't agree, we can at least agree that we have to understand that we may not be right about these things. And then if we agree that we may not be right, then at least we can get to go back and say, "Okay, let's look at the evidence and maybe we can figure out where the truth is in this."

Ultimately, I think many of the ills that we have in society are because people have different beliefs about things. Many of the wars we have are created by that, and maybe the problems we have in social injustice are about or based on false beliefs. And we could have a better world and better society if at least we all agreed--at least understood--that our brains are prone to this and that we shouldn't necessarily trust everything we hear.

The sponsor of the Remarkable People podcast is the Remarkable Tablet Company, and they want me to ask every guest the same question: How do you do your best and deepest thinking? Because the Remarkable Tablet helps you do your best and deepest thinking because it is a device that helps you focus on one task: note taking. No interruptions with email or social media. It's all about note taking.

Guy Kawasaki: As a brain scientist, where, how, what are the conditions for you to do your best and deepest thinking?

Jeff Hawkins: So I can tell you about my personal experiences about thinking. So I’ve developed a pattern, how I go about my work. I've been doing this my whole life, not just neuroscience, but I did this when I was working in mobile computing as well. You know, you’re confronted with some difficult problems-- something to try to understand and it's very confusing.

All of this data doesn't make sense and you're trying to figure out what's going to happen. What's the future? What's reality here? And so what I do is immerse yourself in as much data as you can. In my case, as a neuroscientist, I read papers--lots of them. They are very hard to read even when you're a neuroscientist.

But I've read thousands of them in my life. They're really hard to read. And you immerse yourself in the data and sometimes it just makes it worse. It's just like, Oh, you--your head swimming in concepts and you're trying to figure out what the hell is going on. But then what happens is I have to be patient. I say to myself, "Don't force yourself to come to an answer yet."

Just be patient. It'll come to you eventually. And so don't fret about that you don't understand it yet. And then where the best thinking actually occurs to me turns out I would say three quarters of the time the insights I get are in the middle of the night. I wake up--this is a habit of mine-- I usually wake up somewhere between two and four in the morning.

I will lie in bed. I will not turn the lights on. I will try to stay awake, but sort of at a semi-lucid state, and during that period of time where it's almost a little bit like dreaming, all this sort of- the what I call “constraints”-- are sort of relaxed in your brain, and then answers appear, just instantaneous, "Oh, now I understand the answer to that problem."

Or at least I have an idea how to solve that problem. So many of the insights I’ve personally had, it's been in the middle of the night. I don't know if that works for other people, but for me, that works.

Guy Kawasaki: And how do you prevent forgetting it if you fall asleep before it goes into long-term memory?

Jeff Hawkins: Well that's very funny because my wife says, "Why don't you write it down? Why don't you write down?" I don't know why I don't, but I don't. And what I figure is, if it's a good idea and I don't remember it, it'll come back again. I can't actually prove that happens, but I think it does.

Once I’ve come across an exciting idea in the middle of the night, I'm usually thinking about it now for the next forty minutes or so, "Oh, that's a good idea to think about." So in the morning I usually remember it. Of course, I wouldn't know if I didn't remember…

Guy Kawasaki: But then it wouldn't have been a good idea.

Jeff Hawkins: Yeah, well, who knows? Maybe it was the best idea and I've forgotten them. But enough of them I do remember. It's just a personal style. I don't think everyone works like that. That works for me. Other people might do it like when they’re running or they're exercising or when you're trying not too hard to think about the problem. That's when the answer comes.

Guy Kawasaki: I do it when I'm driving a German stick shift car or I'm taking a shower. And now there's a water shortage and I don't have a German stick shift car, so I haven't had a good thought in years...

There you go. This episode was truly brain food. I hope you learned about the old brain, the new brain, the 150,000 brains in your brain, and the promise and limitations of AI.

I'm Guy Kawasaki, and this is Remarkable People. My thanks to Jeff Sieh and Peg Fitzpatrick who make this podcast remarkable every week.

By the way, beginning with the last few episodes, we put a ton, and I mean a ton of effort into the transcripts of each episode.

They are on the remarkablepeople.com website. Please check them out. Tell your deaf friends about them, and also some of you who are not deaf may enjoy reading as you hear the audio.

I'm fully vaccinated and I hope you will be soon too. Until then, please wear a mask. Do this for the health and safety of people who cannot get vaccinated. Mahalo and aloha!
