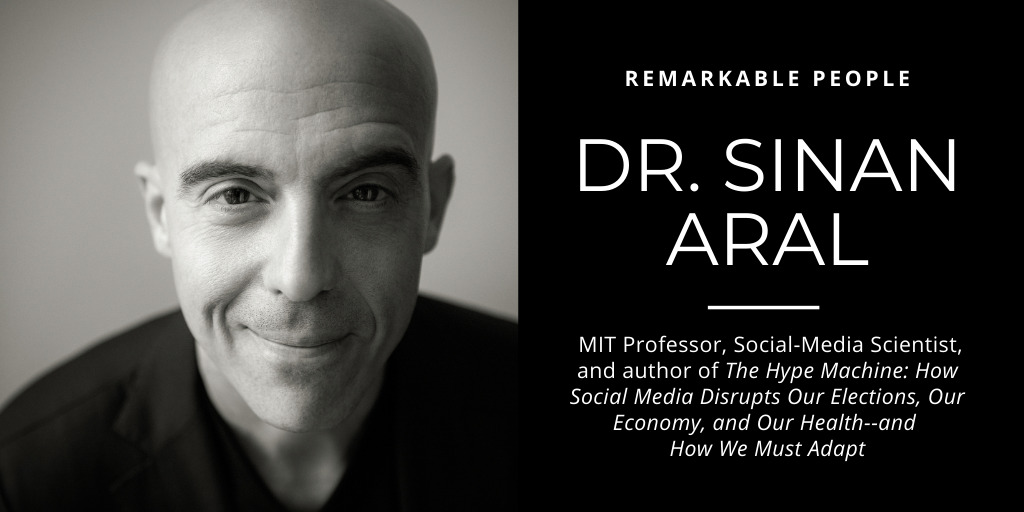

Dr. Sinan Aral is the David Austin Professor of Management and Professor of Information Technology and Marketing at MIT.

He’s a Phi Beta Kappa graduate of Northwestern University. He has master’s degrees from the London School of Economics and Harvard University, and a PhD from MIT.

He recently published a book called The Hype Machine: How Social Media Disrupts Our Elections, Our Economy, and Our Health–and How We Must Adapt.

In short, he is an expert in social media and its impact on society. We cover a lot of ground in this interview, including:

- The structural reform of social media

- Data portability between platforms

- The tension between security and privacy

- The inexplicable reality of the TikTok deal

- The wisdom and madness of the crowd

- How to determine if a social media account is a bot

- The psychology of online ratings

- Why false news spreads faster, farther, and deeper than truth

- The implications of like buttons

- The long game in marketing and advertising


Thank you for listening and sharing this episode with your community.

This episode is brought to you by reMarkable, the paper tablet. It’s my favorite way to take notes, sign contracts, and save all the instruction manuals for all the gadgets I buy. Learn more at remarkable.com

I hope you enjoyed this podcast. Would you please consider leaving a short review on Apple Podcasts/iTunes? It takes less than sixty seconds. It really makes a difference in swaying new listeners and upcoming guests. I might read your review on my next episode!



I'm Guy Kawasaki, and this is Remarkable People. Today's remarkable guest is Sinan Aral. I sure hope I pronounced his name right…

He is the David Austin Professor of Management and Professor of Information Technology and Marketing at MIT. He is a Phi Beta Kappa graduate of Northwestern University. He has master's degrees from the London School of Economics and Harvard University. He also has a PhD from MIT.

He recently published a book called The Hype Machine: How Social Media Disrupts Our Elections, Our Economy, and Our Health - and How We Must Adapt. In short, he is truly an expert in social media and its impact on society.

We cover a lot of ground in this interview, including the structural reform of social media, data portability between platforms, the tension between security and privacy, the inexplicable TikTok deal, the wisdom and madness of the crowd, how to determine if a social media account is a bot, the psychology of online ratings, why false news spreads faster, farther, and deeper than truth, the implications of Like buttons, and the long game in marketing and advertising. In other words, there's enough content in this episode to make your head explode.

This episode of Remarkable People is brought to you by reMarkable - the paper tablet company. Yes, you got that right, Remarkable is sponsored by reMarkable. I have version two in my hot little hands and it's so good. A very impressive upgrade.

Here's how I use it. One: taking notes while I'm interviewing a podcast guest. Two: taking notes while being briefed about a speaking gig. Three: drafting the structure of keynote speeches. Four: storing manuals for all the gizmos that I buy. Five: roughing out drawings for things like surfboards and surfboard sheds. Six: wrapping my head around complex ideas with diagrams and flow charts.

This is a remarkably well-thought-out product. It doesn't try to be all things to all people, but it takes notes better than anything I've used. Check out the recent reviews of the latest version.

Sinan Aral:

We're at the pinnacle of crisis. We're seeing a dramatic amount of political and affective polarization in societies around the world. We're seeing the rise of fake news like we've never seen it before, and we're about to enter a world where deep fakes democratize the ability to fake audio and video at scale and make it very cheap.

We seem to be asleep at the switch. We don't seem to be really taking any actions that are likely to turn this around.

Guy Kawasaki:

If you were president of the United States beginning in January, what would you do to reduce or eliminate these problems?

Sinan Aral:

The whole thing begins with competition in the social media economy. One of the biggest questions in that area is the antitrust question: do we break up Facebook, and will that have any effect? My take is ‘no,’ and the reason is that this economy runs on network effects, which means the value of these platforms is a function of the number of users they have.

That underlying market structure exists whether you break up the leading company or not. So if you break up Facebook, it'll just tip the next Facebook-like company into monopoly status.

What we really need is structural reform of the social economy, beginning with data portability, identity and social network portability, and legislated interoperability, like the ACCESS Act that's sitting before Congress now, which would force platforms with more than 100 million users to interoperate with each other. That'll create choice, and we've done this in the cell phone market and in the messaging market to great effect. Whether we then break up Facebook or not is a different question, but just breaking up Facebook is like putting a Band-Aid on a tumor.

Guy Kawasaki:

For people who might not understand it, and just to make sure that I understand it, what do you mean by portability?

Sinan Aral:

Portability is the ability to take your data, your social networks, and other data with you from one platform to another. The really important piece of this is social network portability.

So when Mark Zuckerberg was being questioned by Senator Kennedy in one of the hearings, the Senator said, "Can I take my social network to another social network?" And Mark Zuckerberg said, "Yes, Senator, we have a button that says, 'Download your social network.'" And if you press that button, you get a PDF with a list of all your friends' names, which is not very extensible or helpful.

So a great analogy is the number portability of cell phones. Back in the day, we weren't able to take our number with us if we switched, for example, from Sprint to Verizon. That number represented our social network because all of our friends knew to call us there. Once we were able to take our number with us when we switched from Sprint to Verizon, it created a lot of competition in that market. The same thing is true here.

If we are able to take our social networks with us from one social network like Facebook to another, and if messages interoperate better across social networks, it'll force the platforms to compete on real value: design, less fake news, more security, better privacy, rather than just competing on, "We're the network where all your friends are. You have nowhere else to go."

Guy Kawasaki:

Let's say that I have one million followers on Twitter. You're saying that if I say, "All right, I'm out of Twitter. I'm going to go to XYZ platform," I can take those followers with me? What if they're not on the next platform?

Sinan Aral:

They should be able to be with you on any platform. If they're not on your new platform, the messaging should interoperate. In other words, they should be able to receive your messages on whatever platform they are on, even if they haven't joined the one you switched to. If they are on the platform you've joined, they should be able to follow you there as well.

This is what we've known for many decades as technical lock-in. Lock-in is really what keeps users on a specific platform, and then there's really no incentive to innovate. I've got you, so why do I need to provide a better product?

Guy Kawasaki:

To continue to use a phone analogy, when I switch from AT&T to Verizon, I don't take my voicemails from AT&T with me to Verizon. What's the metaphor there for social media?

Sinan Aral:

The metaphor is really simple. So once you switched from AT&T to Verizon, I should still be able to call you from AT&T to Verizon. Maybe the saved messages of the voicemail you lose by switching from AT&T to Verizon, but I should be able to message you and create new voicemails wherever I am.

Frankly, think about it this way: voicemails are one thing. Many people I know, including myself, use Instagram and other similar platforms as a scrapbook. They are our family histories. They are our relationships. They are our very memories. The fact that we can't take those with us when we go to another service is maddening to me.

Guy Kawasaki:

The Silicon Valley entrepreneur part of me says, "Oh my God, this is heresy. My whole life I've been trying to build in something so you can't quit." I guess you're saying that in an enlightened world, the ability to come and go is a feature, and you would not pick a service that wouldn't let you leave if you wanted to go.

Sinan Aral:

Exactly. When you have the ability to go, and you have the ability as a consumer to vote with your feet, it forces the platforms to innovate and deliver the best service. You want to be communicating with others through the one that gives you the best privacy, the best user interface, the best tools, and so on, rather than just the one that holds the keys to your stuff so you can't leave.

Guy Kawasaki:

Okay.

Sinan Aral:

You're right, I mean, lock-in... I'm an entrepreneur as well and have built several companies, and yes, growth, engagement, and reducing churn are all goals we try to achieve, but how about we achieve them with innovation and quality products rather than database-level technical lock-in? Yes, there are certain cases where it makes sense: if the data element, feature, or content is created as a function of some unique aspect of that platform, maybe it should stay unique to that platform. But we need some level of interoperability and portability for commoditized messaging. A text-based message from one person to another shouldn't be something that's owned by a given platform.

Guy Kawasaki:

My impression whenever I listen to politicians talk about this topic, or, worse, watch them, is that it's a seventy-year-old, guy or gal, with some twenty-year-old intern whispering to them because that intern has the Facebook account. Mark Zuckerberg versus a seventy-year-old senator depending on an intern whispering, "This is what you should ask Mark…" That ain't going to fly. I mean, it's not a fair fight. So how do you think that's going to work? Elizabeth Warren says, "Break up Facebook."

Sinan Aral:

I’ve got to tell you that every time one of these hearings comes on TV, I pop a huge bowl of popcorn and I'm right there on the couch watching this thing. A lot of times, it's like a train wreck. I have to say, it's getting better. The last antitrust hearing in the House was much better than the hearings I saw about six or eight months ago. However, it's still, as you say, pretty appalling.

In addition to competition, there are a number of market failures we have to hold hearings about and solve, including federal privacy legislation, free speech versus hate speech, election integrity, fake news, and so on. In the book, I call for a national commission on democracy and technology, populated by scientists, platform leaders, journalists, activists, policymakers, entrepreneurs, investors, and so on, to really dig into what's going on under the hood.

I think we've got to get rigorous about this. We're well past the point where armchair theorizing about social media and its impact on the world is going to help us. We need to get a lot more rigorous, very fast.

Guy Kawasaki:

How do we thread the needle of privacy and transparency?

Sinan Aral:

This is something I've been talking about for several years; I call it the transparency paradox. On the one hand, we want these platforms to be open and transparent about what's going on under the hood: how these algorithms are affecting society, how they work, what their objective functions are, and why they're doing what they're doing. We want access, as scientists and as policymakers, to the data and the algorithms to see how things work. On the other hand, we're calling on these platforms to lock down, be secure and private, and not share the data with anyone.

Remember, the Cambridge Analytica scandal originated with Facebook sharing data with a university. So this is truly a paradox, but there is a way to thread that needle, and that is by becoming more secure and more transparent at the same time.

A good example is differential privacy, a form of algorithmic privacy that allows you to share data at the individual level but keep everything anonymous. It adds a bit of noise to the data so that you can still draw reasonable scientific conclusions, while the data is obfuscated enough that you can't tell what any particular person's data is. That's one example.
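Differential privacy is a family of techniques, and the interview doesn't say which one platforms or researchers would use. As a minimal sketch of the "add a bit of noise at the individual level" idea, here is classic randomized response, a form of local differential privacy, assuming a simple yes/no question (for example, "did you share this article?"). The function names and the 30% example population are hypothetical.

```python
import random
from typing import Sequence


def randomized_response(truth: bool, rng: random.Random) -> bool:
    """Report one person's yes/no answer with plausible deniability.

    With probability 1/2 the true answer is reported; otherwise a fair coin
    decides the report. A "yes" is then reported with probability 3/4 when
    the truth is yes and 1/4 when it is no. That likelihood ratio of 3 makes
    the scheme epsilon-differentially private with epsilon = ln(3).
    """
    if rng.random() < 0.5:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(reports: Sequence[bool]) -> float:
    """Undo the noise in aggregate, not per person.

    P(report yes) = 0.5 * p + 0.25, where p is the true share of "yes",
    so p = 2 * (observed share of "yes" - 0.25).
    """
    observed = sum(reports) / len(reports)
    return 2 * (observed - 0.25)


if __name__ == "__main__":
    rng = random.Random(7)
    # Hypothetical population: 30% of 100,000 users would truthfully say "yes".
    truths = [i < 30_000 for i in range(100_000)]
    reports = [randomized_response(t, rng) for t in truths]
    print(f"estimated share: {estimate_true_rate(reports):.3f}")  # should land near 0.30
```

No single report reveals what that person actually answered, yet a researcher can still recover the population-level rate; the cost is extra statistical noise, which shrinks as the number of participants grows.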

Guy Kawasaki:

I have to tell you that when I was reading your book, and you cite study after study of people who got Facebook data and did this and got Twitter data and did this, and now when I go to Facebook and Twitter I think, "Am I part of some study where they're purposely sending me stuff to see if I'm in the bubble or I'm not in the bubble or am I reacting?" My head is exploding as I'm reading your book, and I say, "Well, what do I do about... "

Sinan Aral:

Guy, I can guarantee you that you are not in a study, you're in thousands of studies at the same time. It's happening on every platform - Facebook, Twitter, Google - they're running thousands of randomized experiments every minute. You are in a control group or an experimental group on every platform at every moment of the day. That is the truth.

Guy Kawasaki:

I feel like we're in “Men in Black,” and you open the little thing and we're in a simulation.

Sinan Aral:

It's like, "My wand, where's my wand?"

Guy Kawasaki:

Are you going to make me forget that?

Sinan Aral:

You're right. Correct.

Guy Kawasaki:

I can just imagine. But I have to ask you: what do you think of the TikTok deal, which I described as, "Well, we'll sell off the American assets, and that'll protect the privacy of people who live in America from the Chinese spies"? My head explodes. That is such a... Okay, I won't say anything. What do you think?

Sinan Aral:

Yeah. I mean, I am still scratching my head over this one: the whole concept of bifurcating a complex network like TikTok, where everybody follows everybody else across geographic borders, and somehow drawing a legal line in the sand between American data and Chinese data, when American users are intimately connected and interwoven with Chinese users watching TikToks in both directions.

Whose data is where at any time? What does that even mean? Is it determined by the server on which it resides? By where the person is? When they watch a Chinese TikTok, where does that data reside? None of that makes any sense to me in terms of how to define it legally. We're going to have to really get under the hood and figure out how to marry law and technology in this circumstance.

I think that we see a lot of executive orders that aren't... This was an executive order. There was going to be a ban on TikTok, then it was pulled back. There was some backroom dealing and so on. It's ad hoc. It seems very ad hoc, and I think we need to be more principled.

Guy Kawasaki:

Now, it seems to me that Larry Ellison, the CEO of Microsoft, the CEO of Oracle, and the CEO of Walmart are intelligent people, so what is going through their brains when they say, "Hey, Donald, we'll buy the American assets of TikTok"? What am I missing here?

Sinan Aral:

I think the logic of buying TikTok makes sense. What I don't understand is how a line in the sand will separate Chinese TikTok from American TikTok, with each governed in these very distinct, bright-line ways. I think that's very hard to do technically.

I think the reason why Oracle wants to buy TikTok is because they realize, as I say in the book, that the social network is the computer now. The human network connected by digital connections where information traffic is routed by algorithms is the computer now. You have to have a foothold in that ecosystem in order to be part of the future.

Oracle wasn't the only one, right? Microsoft was first, and then Oracle came through in the end. Microsoft bought LinkedIn, right? This is happening because this is the next plateau in the evolution of innovation.

Guy Kawasaki:

Okay. I guess it's above my pay grade. I want to get some real practical advice now. Number one: how can you tell what's fake news?

Sinan Aral:

People say, "How are we going to solve the fake news crisis?" My first instinct is always to go into the policy-wonk explanation: "We need these laws and we need these changes to the algorithms." Then they stop me and say, "No, how am I going to stop fake news?"

When I see fake news come across my social media, it's usually preceded by the preamble, "I don't know if this is true, but it's really interesting if it is," and then we share it. We’ve got to stop doing that. It's designed to be really interesting if it's true, but it's not. Just be a little reflective.

As I describe in the book, there's a lot of science showing that if we are reflective, and if platforms nudge us to be reflective, we are less willing to believe fake news and less likely to share it.

Second, I think that the eighty/twenty rule applies to fake news. The vast majority of the fake news that's floating around today can be debunked with a few Google searches and a few clicks. So just taking a moment to do that before sharing or believing is a great idea.

Our research also shows that fake news is salacious - it's surprising, it's disgusting, it's anger-inducing, it's blood boiling. So take your emotional pulse. If you feel your emotions rising in anger, say, "Wait a minute, is this really true?” And finally, check the original source.

A lot of these fake news stories come from websites that are masquerading as real websites but they have a slight difference in the URL or there's a typo in the header or they use a lot of all caps. Just checking out the original source can go a long way to rooting out some of this fake news.

Guy Kawasaki:

Do you think that, starting at age ten, there should be education about "this is basically what to believe when your cell phone shows you something"? I mean, don't we need some digital literacy? I think even calling it digital literacy is not doing it justice. It's literally literacy today, right?

Sinan Aral:

Yeah. There are studies that show that this can be effective. There have been games for kids designed to teach them how to spot fake news. Google has some interesting programs on this.

I definitely think we need more digital media literacy. The jury is still out on exactly how effective it is because that research takes a little bit longer, but I certainly think we have to prepare our kids.

I have a seven-year-old son. The pandemic has changed everyone's life. Before the pandemic, he got essentially no screen time. He didn't watch TV or movies. He didn't use the pad or play with the phone. Now he can fix my laptop, and it's only been a few months. He's forced to connect with his friends over social media. He has to Zoom in to his virtual classroom, and so now he's a pro, and there's nothing we can do about it.

Guy Kawasaki:

What happens if he sees a tweet or something that says, "The White House was bombed and Barack Obama died."?

Sinan Aral:

Yeah. Yeah. That's a real tweet. I tell that story in my book: that tweet sent the market crashing, and we lost $140 billion in equity value in a matter of minutes. I give my son very strict rules around social media. He's got his own device that isn't connected to all those other things with notifications.

Guy Kawasaki:

That you know of.

Sinan Aral:

Exactly. He's probably already connected it, right? Exactly.

Guy Kawasaki:

He's got two million followers on TikTok. I hope you understand that.

Sinan Aral:

Probably. Probably. We try to set rules around it. We try to limit it. We try to turn notifications off. We try to monitor what he's doing. Some tools, like Facebook Messenger Kids, allow parents to monitor who he's connected to, what they're doing, and so on, which I think is relevant at that age.

Guy Kawasaki:

What do you do when you want to figure out if someone or an account is a bot? How do I know this isn't a Russian bot telling me, I don't know what, "The police in Portland are getting killed"?

Sinan Aral:

Yeah, I think that for humans, bots are harder to discern. There are a lot of good bot-detection algorithms that can root out bots and take them down. The platforms use them. We've developed them at MIT, at the Initiative on the Digital Economy, which I direct.

For humans, a lot of these bots are incredibly sophisticated and able to kind of... And remember, the interactions that we have on Twitter and these kinds of places, they're shallow, right? They don't last that long.

Am I having to bring out the wand again? They're really shallow, Guy. No.

Guy Kawasaki:

Let me write that down.

Sinan Aral:

Tell all your friends. They only last a couple of exchanges back and forth. In that kind of environment, they can masquerade pretty well as being a human. I think that one way to tell is that they are not good at exception handling.

So if you throw them a specific piece of information or a specific question about something in the human zeitgeist that a bot, being fairly generic, wouldn't fully get, that could throw them off, and you might be able to tell that way. But in a few back-and-forth tweets, it's hard.

Guy Kawasaki:

So what's an example? So if I think something's a bot, then I say, "So what was the weather in St. Petersburg today?"

Sinan Aral:

No, they'd probably answer that better than a human.

Guy Kawasaki:

Okay, so what do I ask this supposed bot?

Sinan Aral:

What do you think about that TikTok deal? Are they going to be able to separate those networks? Or maybe something about Mark Zuckerberg's comments. Or maybe something about feelings, things that only human beings would really be able to answer. Feelings are probably not a good one; they can mimic those pretty well.

Guy Kawasaki:

Okay.

Sinan Aral:

They just use the adjectives.

Guy Kawasaki:

Okay. Next tactical question: how do we distinguish between a wise crowd and a stupid crowd?

Sinan Aral:

There was an awesome book that you may have read by James Surowiecki called The Wisdom of Crowds, back in 2004. I mean, fantastic book. I loved it. It's one of my favorite books, and it built on a theory that Sir Francis Galton espoused 100 years before that, in 1904 or 1906.

The Wisdom of Crowds is great, right? The idea is that a group of people thinking together is wiser than any individual of that crowd, even the most expert one, thinking by themselves. The problem with the wisdom-of-crowds theory is that James Surowiecki wrote it down in the book in the same year that Facebook was invented.

The wisdom-of-crowds theory rests on three pillars. One is that the opinions of the crowd are independent of one another. Two, that there are diverse opinions in the crowd. And three, that the members of the crowd have equal voice. Well, it turns out that social media essentially destroys all three of those pillars...

Guy Kawasaki:

Mm-hmm. Yes.

Sinan Aral:

... and turns our crowd wisdom into madness. Wise crowds are able to find the truth more accurately and faster than individuals. Wise crowds are more creative than individuals by themselves and better at problem-solving than individuals by themselves, but they have to be aggregated and put together in a way that makes them wise.

The way that we're constructing our society on the back of social media tends towards madness rather than wisdom. Part of this is political polarization, right? The idea that we're being bifurcated, at least in the United States, into these two opposing political camps that essentially hate each other now, and the rise of political polarization eerily mirrors the rise of Facebook. In the book, I go through the detailed scientific evidence on whether echo chambers exist, whether filter bubbles exist and whether that can explain the dramatic political polarization we see today.

Guy Kawasaki:

Would you say that Wikipedia is an example of wisdom?

Sinan Aral:

I think it's pretty wise if you think about it. There have been studies showing that early on, Wikipedia very, very quickly became at least as accurate as the expertly assembled, leather-bound Encyclopedia Britannica. I think that there is a tremendous amount of wisdom there.

The thing that makes Wikipedia amazing is that it scales wisdom rapidly. It doesn't take months and years to create, like a leather-bound encyclopedia. It is constantly updating itself in real time, through the crowd and through its moderation and editing processes, to stay current and pretty darn accurate in a crowdsourced, wise-crowd sort of way.

Guy Kawasaki:

Okay. Another sort of wisdom-of-the-crowd question: when I go to Amazon and I see that your book is rated 4.9 stars by 500 people in aggregate, do I say that it meets the three conditions of the wisdom of the crowd, so it's good information? Or do I say, "That's madness. He's in a tech bubble. All the MIT alumni gave him five stars"? How do I interpret Amazon ratings?

Sinan Aral:

Yeah, I wrote a bot to give myself stars.

Guy Kawasaki:

Can I get access to that?

Sinan Aral:

Right. Right. Yes, it's very expensive, guys, very expensive.

Think about it this way: if we were to randomly poll all of the consumers of a product, we would expect that their opinions of that product would be like a bell curve. Most people would have an average experience. Some people would have an extravagantly good experience, and some people would have a really horrible experience, but they would be in the tails.

Most of the people would have an average experience. That's the truth of this normal distribution, this bell curve, for outcomes in society, generally speaking. But when you look at Amazon five-star ratings, they have a J curve. There are a lot of ones, fewer twos, fewer threes, and then mostly fours and fives. So it's a J curve from one to five.

There are three reasons for that. One is selection bias. Only people who have a great experience or a horrible experience really feel the need or the motivation to write a review. If it was kind of eh, you're not going to write a review and say, "I'm really motivated to say 'eh' in my review." It's either, "Wow, I loved this," or "I hated this so much, I have to write a review."

We did a study, published in Science, that showed social influence bias, which is that we herd on the ratings that come before us. I'll give you an anecdote. I wanted to rate a restaurant that I went to in Washington Square Park. I thought it was an average restaurant: average food, average service, average ambience, and I wanted to give it three out of five stars. I went to Yelp, and right next to where I would rate was this bright red five-star rating waxing poetic about how the ginger sauce was amazing and the prices were great. And I was like, "Huh, she's got a point. Yeah, it’s a lot better than I remember." So I gave the place a four instead of a three.

We did a very large-scale experiment on this where we randomly manipulated ratings. We gave some an extra positive rating and others an extra negative rating, and we saw what happened. It turns out that this dramatically influences the rating.

A single up vote, randomly assigned by the experimenters at the beginning of the rating cycle, increased the average ratings of items by twenty-five percent over time. It just snowballs into this path dependence of ratings.

So the answer is that you can learn a lot from ratings, but there are some well-known systematic biases in ratings that keep them from being the objective, aggregated opinion of the crowd. There's a lot more going on in the dynamics.
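To make the selection-bias point concrete, here is a toy simulation. It assumes, hypothetically, that true experiences follow a rounded bell curve centered on three stars and that people with extreme experiences are far more likely to post; the reporting probabilities are invented for illustration, not measured. It reproduces only the fattening of the tails, not social influence bias or the other effects that tilt real ratings toward the high end.

```python
import random
from collections import Counter
from typing import Tuple

# Hypothetical chance that a customer bothers to post a review, by star level:
# delighted or furious customers usually do, "eh" customers rarely do.
REVIEW_PROBABILITY = {1: 0.6, 2: 0.15, 3: 0.05, 4: 0.15, 5: 0.6}


def true_experience(rng: random.Random) -> int:
    """Draw a 1-5 star experience from a rounded bell curve centered on 3."""
    score = round(rng.gauss(3.0, 1.0))
    return min(5, max(1, score))  # clip the tails into the 1-5 range


def simulate(n_customers: int, rng: random.Random) -> Tuple[Counter, Counter]:
    """Return (all experiences, posted reviews) as star-count histograms."""
    everyone: Counter = Counter()
    reviewers: Counter = Counter()
    for _ in range(n_customers):
        stars = true_experience(rng)
        everyone[stars] += 1
        # Only some customers post, with a probability that depends on their stars.
        if rng.random() < REVIEW_PROBABILITY[stars]:
            reviewers[stars] += 1
    return everyone, reviewers


if __name__ == "__main__":
    everyone, reviewers = simulate(100_000, random.Random(1))
    for stars in range(1, 6):
        print(stars, everyone[stars], reviewers[stars])
```

Among all simulated customers, three stars is by far the most common outcome; among posted reviews, ones and fives outnumber threes, even though nobody's actual experience changed.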

Guy Kawasaki:

I'm hesitant to tell you what I do for my books. It's not unethical, but I guess I internalize what you just said. I might cut this from the actual final episode, but I'll tell you what I do.

Sinan Aral:

Tell me.

Guy Kawasaki:

I solicit a lot of feedback prior to "finishing" a book. Over the course of writing it, I send out the outline nine months before I finish, then I send out drafts, and then I send out the final. I keep a database. I go on my social media and say, "I'm writing a book. I'd like your input. I'd like your feedback. I'd like your copy editing, et cetera."

Sinan Aral:

Fantastic.

Guy Kawasaki:

Over the course of several of these things... I look at every comment and make changes, so I really use this. So now I have a database of 2000 people who've gotten the manuscript, 500 people who've actually done something and then the day that Amazon releases the book, I send an email to 2000 people, and I say, "Listen, do me a favor, go rate the book. You have read it. You're legitimate, go rate the book, do me a favor."

Sinan Aral:

Yeah.

Guy Kawasaki:

The next day, I wake up and I have 100 five-star reviews.

Sinan Aral:

That's amazing.

Guy Kawasaki:

Now, some of it is because "I write a good book" but also some of it is because they're so tickled pink to get the book early and, "Guy Kawasaki asked me to do this." I know that's working too.

So according to what you just said, someone thinks, "Well, the reviews are all five stars. I was going to give it three stars, but now I'll give it four." Am I a bad person for this?

Sinan Aral:

I actually recommend in seminars that businesses and entrepreneurs do this. It's not illegitimate, because these are all legitimate reviews. You're just encouraging people who have had a personal experience with the product to rate it and, much more importantly than rating, to rate early, because they're going to affect the ratings of everybody who comes after them through social influence bias.

Guy Kawasaki:

I'll tell you that if you start off on day one with 100 five-star reviews, it's very hard to get the average down to two stars at that point, right? I mean, just mathematically, you need a lot of offsets to make the average go so low. Do lies spread faster than truth?

Sinan Aral:

Yeah, so we did this very large ten-year study on Twitter, which we published on the cover of Science Magazine, covering all of the verified true and false news that ever spread on Twitter from 2006 to 2017. What we found was that false news traveled farther, faster, deeper, and more broadly than the truth in every category of information we studied, sometimes by an order of magnitude. In fact, false political news was the most viral of all the false news, and false news traveled six times faster than the truth. This is an oft-cited statistic.

Sacha Baron Cohen mentioned it in his remarks before the Anti-Defamation League. Tristan Harris mentions it in The Social Dilemma. A lot of people have heard this result, and it's a true result.

Guy Kawasaki:

Now, it...

Sinan Aral:

Did you ask me why?

Guy Kawasaki:

Yeah, why? I mean, yeah, why?

Sinan Aral:

The first thing we thought was, "Well, maybe people who spread false news are different from people who spread true news. Maybe false news spreaders have more followers, or maybe they follow more people, or maybe they're more often verified, or maybe they're on Twitter more often, or maybe they've been on Twitter longer." Then we checked each one of these in turn, and the opposite was true for every one of them.

So false news spreaders have fewer followers, follow fewer people, are less often verified, have spent less time on Twitter, and so on. False news was spreading so much farther, faster, and deeper despite these characteristics, not because of them. We had to come up with another explanation.

What we discovered was what we call the novelty hypothesis, which we validated in the data. If you read the cognitive science literature, it tells you that human attention is drawn to novelty, to new things in the environment. If you read the sociology literature, you learn that we gain status when we share novel information, because it seems like we're in the know or that we have access to inside information somehow.

We checked the novelty of false tweets compared to true tweets, measured against what each person had seen in the sixty days before seeing a given true or false tweet, and we found that false news was indeed way more novel than the truth across a number of different dimensions. Then we looked at the replies to the true and false tweets, analyzed their sentiment, and looked for words expressing surprise, anger, disgust, and so on.

We found that people were replying to false news expressing surprise and disgust, and were replying to true news expressing anticipation, joy, and trust. So we think that the novelty factor, that shock and awe, as well as its emotional, blood-boiling nature, are two of the reasons why false news moves so fast online.

Guy Kawasaki:

But novelty is different than truth, right?

Sinan Aral:

Oh yeah.

Guy Kawasaki:

So you could have something that's novel and true, or novel and false. So will a novel, true thing travel six times faster than a thing that's neither novel nor true?

Sinan Aral:

Exactly. Truth is fighting falsity with one hand tied behind its back because in terms of being able to be novel, it's much easier to come up with novelty that's false because it's made up. You can make anything up, right?

Guy Kawasaki:

True.

Sinan Aral:

With the truth, you're constrained to some reality that exists. I like your question, though. The question is: do novel truth and novel falsity travel differently? We don't know. We need more research on this. What we know to this point is that false news travels farther and faster, and that false news is way more novel than the truth.

Guy Kawasaki:

So if people want to up their social media game, they should post novel true things, right?

Sinan Aral:

Well-

Guy Kawasaki:

When I re-share something, I don't consider the novelty of it, I consider the importance of it.

Sinan Aral:

Yeah, yeah.

Guy Kawasaki:

Which is maybe the wrong standard.

Sinan Aral:

I think both are important. I think the ethical way to up your social media game is novel, true things. A lot of people share novel, salacious, false things to up their social media game. I think the importance factor is incredibly relevant. I feel the same way you do.

At the same time, I was speaking to a journalist who gave the oft-cited quote, "We report on 'man bites dog.'" It's a rarity that a man bites a dog, and so that's the thing we want to report, the thing people don't know about. That novelty factor is important, but obviously, the novel truths are the really important things that need to be getting out in society.

Guy Kawasaki:

Can you explain what makes an influential person influential for social media?

Sinan Aral:

First, the definition of influence, okay? It's one thing to have a big microphone, and it's another thing to be influential. Back in the early days of social media, I used to use Ashton Kutcher as an example, because he was one of the first influencers. Maybe you can give me a good person to replace him with.

He took out billboards on the 405 Freeway in LA that said, "Follow aplusk on Twitter," right? So he was one of the first to get a lot of followers.

I used to do this thing in seminars where I would ask people to raise their hand if they knew Ashton Kutcher, if they followed him on Twitter, and all the hands would go up. Everybody knew Ashton, and everybody followed him on Twitter at the time. Then I would say, "Okay, now put your hands down. Now give me a show of hands of everybody who's ever done anything that Ashton Kutcher has asked you to do." No hands would go up, right?

That's the difference between having a big microphone and having influence. Influence is about changing people's opinions, beliefs, and behaviors. It's not just about having people's ear and people listening to you.

A great example: say Barack Obama gives a speech to raise money for Joe Biden in the upcoming election, and he gives it to a group of Democratic donors who have already donated a lot of money to Biden. If you want to know the influence of that speech, you want to know the delta. You want to know the marginal increase caused by that speech. So that's really the definition of influence.

Proxies for influence on social media include not only the size of the audience but also engagement with the audience: the idea that you have conversations with your audience, that they talk back and you talk back to them. You know who's absolutely fabulous at this? Gary V.

Guy Kawasaki:

Yes!

Sinan Aral:

I have sincere opinions about him. He's really amazing at this notion of really understanding what it means to engage your audience.

Guy Kawasaki:

What does he do that's so amazing?

Sinan Aral:

Well, so Gary V, when I started looking into his stuff, I was skeptical at first because there were a lot of... I mean, his books are Jab, Jab, Jab, Right Hook and Crush It! He's got cartoons and pictures of his face next to smiling turds and weird things. But I just kept looking at his stuff, watching his videos, and looking at more of his social media posts.

Then at some point, halfway down the rabbit hole, I realized, "Wait a minute, he's just proven to me exactly why he's so good at what he does. He's got the one thing that he was after the entire time, which is my attention. He's got my attention. He's got me watching."

He's engaging. He's speaking to his audience. He's doing these live shows where every day he's on with somebody, answering their question. Some seventeen-year-old kid in Idaho calls him over Zoom, and he listens, and he's like this on the Zoom: "Okay, what's your question? Let me know..."

And he's sincere! He's sincere about caring about what the question is and what the answer is and his audience is engaged by that, and he's engaged by his audience.

I think that a lot of that has to do with his understanding of what I describe in the book as the attention economy. This is really the next major economy that we have to get our hands on as entrepreneurs, as innovators, as policymakers, and as citizens. The social media economy has scaled the attention economy in a way we've never seen.

Social media sells attention as a precursor to persuasion: the ability to have a large group of people's attention so that you can, going back to our conversation about influence, influence them, whether you're a brand buying ads or whether you're a pop... By the way, people are outraged that there would be a technology that could persuade people like this, but it was television before that, radio before that, and newspapers before that.

It's not just selling products or fake news. It's also public health officials trying to get you to socially distance, or the Public Health Department of the government of South Africa, which I work with, trying to get you to test yourself for HIV, or to stop smoking or sharing dirty needles. There are a lot of positive messages that can change behavior as well. This attention economy is about grabbing your attention and then being a conduit for persuasion, for influence, as it were.

Guy Kawasaki:

Don't we have a natural tension here in that if the currency is attention, going back ten minutes, the way we get that currency is novelty?

Sinan Aral:

Yeah.

Guy Kawasaki:

Right?

Sinan Aral:

Yeah, yeah, absolutely. Yes, there's a tension. There's an absolute tension, but novelty is not necessarily good or bad. The task is to separate the good novelty from the bad novelty.

Now, the reason I call this book The Hype Machine is that in an attention economy, engagement is king. If I'm a platform and I have an engaged user base that's logging on, reading, listening, and so on, then I have more opportunities to sell that attention, and that's the advertising economy on which all of these platforms run.

How do we get engagement? Well, the low-hanging fruit they landed on early was that hyping us up is what gets our engagement. That's why I call it the hype machine.

Let's just think creatively for two seconds. We've got this thing called the 'Like' button. Why is there a 'Like' button? First, it allows the platforms to keep score of what lots of people seem to like and engage with, and therefore what they should show to more people.

Secondly, it is you giving the machine your preferences. "I like this. I don't like that. I like this. I don't like that." Now it learns what you like, and now it gives you more of what you like to keep you more engaged.

It doesn't have to be that way. It doesn't have to be based around the 'Like' button. Why isn't there a 'Truth' button, or a 'Wholesomeness' button, or a 'This Taught Me Something' button, or a 'This Corrected a Misconception I Had' button, or a 'This Helps My Exercise Routine' button?

So what if we rewarded those types of things the way we reward likes? Maybe we could call them kudos; that's what Strava calls them when you run the extra mile, right?

Guy Kawasaki:

Mm-hmm.

Sinan Aral:

If we got kudos for things that we value in society, would people try to become influencers by producing more of that type of content, like truth and wellness and education? Would those people be listened to more and get a greater share of everyone's conversational attention? Why is popularity our standard for all metrics of success today? That's a horrible standard for measuring success.

Guy Kawasaki:

Let's pretend, magically, that Mark Zuckerberg says, "Okay, we'll kill the ‘Like’ button, we'll kill all that other stuff. Now it's Truth or Kudos or whatever." What do you think would happen?

Sinan Aral:

I don't think you need to kill the ‘Like’ button.

Guy Kawasaki:

Okay.

Sinan Aral:

Why not add these other buttons? So I think that polling the crowd about whether you trust what you're reading here, or whether you feel this is enriching your life in some way physically or mentally, whether you're learning something or whether it's improving your physical health, these are important things for the rest of us to know as well. We have similar buttons elsewhere, right?

So like, “Was this review helpful,” is something that you see on Amazon all the time, right? How helpful was this review? That's eliciting a different type of feedback.

What we've done is we've just landed on the lowest common denominator feedback loop that we could possibly find, which is basically like, "Who's the coolest," and that's where we are today. We need to graduate up from that.

Guy Kawasaki:

Knowing all you know, what's your advice to a legitimate and ethical digital marketer?

Sinan Aral:

Chapters six through nine of the book cover all of the tactics, the blocking and tackling, and the strategy of digital marketing. I think there are a number of different things that successful digital marketers really need to do.

Back in 2017, Marc Pritchard, who is the Chief Brand Officer of Procter & Gamble, went in front of the IAB and gave a rousing speech, as far as marketing speeches go. He called out the entire digital marketing ecosystem and said, "We are no longer participating in this fraudulent system that doesn't measure performance well, that is not transparent, that has a lot of click fraud, that has opaque agency contracts."

He cut his digital marketing budget by $200 million, and the pundits were like, "P&G is going to contract. This is a huge mistake." And then he doubled the industry average for sales growth, at seven-point-five percent. I describe in the book how they did that at Procter & Gamble. It was about not advertising more but advertising more effectively, and I describe how you do that.

You do that by creating an integrated digital marketing and social media optimization program that at its core rests on understanding what effect your messages have on the beliefs, behaviors, and perceptions of your audience. The key lesson to learn there is the difference between lift and conversions. I tell this story to my class when they come into my classroom at MIT when I'm teaching digital marketing. I meet them at the door with a stack of leaflets advertising the class.

As they walk in, I say, "Hi, I'm your professor. I'm Sinan. Here's a leaflet. Hi, I'm your professor, here's a leaflet." They all get a leaflet. They all sit down. They're sitting down with the leaflet in front of them on the desk, and the first question I ask them is, "What is the conversion rate on that leaflet?" They think for a minute and they go, "100%. Everybody who saw that leaflet took the class."

Guy Kawasaki:

Yeah.

Sinan Aral:

Then I say, "What is the behavior change created by that leaflet?" And they say, "0% because we had all already decided to take this class before seeing the leaflet." That's the difference between conversion and lift.

Lift is a causal concept. What is the causal change in your behavior after seeing my persuasive message? I package that into the entire integrated digital marketing optimization program where that concept of lift is at the core of everything the digital marketing team should be doing today.
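Sinan's leaflet story can be restated as a small calculation. The sketch below is a hypothetical illustration, not a method from the book: it assumes we can compare people who saw a message with a comparable group who did not. In the classroom there was no real control group, so the hypothetical "without leaflet" list stands in for the fact that every student had already decided to take the class. Conversion only counts how many exposed people acted; lift subtracts what they would have done anyway.

```python
def conversion_rate(actions: list[bool]) -> float:
    """Share of people in a group who took the action (e.g. enrolled in the class)."""
    return sum(actions) / len(actions)


def lift(exposed: list[bool], control: list[bool]) -> float:
    """Estimated lift: action rate among people who saw the message minus the
    rate in a comparable group who did not. If exposure was randomized, this
    difference estimates the behavior change the message caused."""
    return conversion_rate(exposed) - conversion_rate(control)


# Hypothetical leaflet example: all 40 students who got a leaflet took the class,
# but (assumed) all 40 would have taken it without a leaflet too.
with_leaflet = [True] * 40
without_leaflet = [True] * 40

print(conversion_rate(with_leaflet))         # 1.0, i.e. "100% conversion"
print(lift(with_leaflet, without_leaflet))   # 0.0, i.e. no behavior change
```

Randomizing who sees the message is what makes the control group comparable; without it, the difference can reflect who was targeted rather than what the message did.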

Guy Kawasaki:

Using that concept, let's take it as a given that the Russians tried to influence the 2016 election. Maybe they didn't get much lift. Did they really influence the election or not? Do we know?

Sinan Aral:

In my role as a business educator, the two questions I'm asked most often are, one: "Did Russia tip the 2016 election?" And two: "How do I measure the ROI from my digital marketing budget?"

It turns out the answer to those two questions is the same. You go through the same process to find the ROI of your marketing budget as to find out whether Russian interference changed the election: you figure out what behavior change it created.

In the book, I go through all of the evidence we have today on whether Russia changed the 2016 election. There are two questions you have to ask. First: "Did it affect voter turnout? Did it affect vote choice?" That's the behavior change. Second: "Were the spread, breadth, scope, and targeting of the messages sufficient to make a meaningful change in the election, if it changed behavior?"

So on breadth, scope, and targeting: we know that they reached 126 million people on Facebook and 20 million people on Instagram, posted 10 million tweets from accounts with six million followers on Twitter, and put up forty-three hours of YouTube content. This is the consensus of the intelligence community and the Senate Intelligence Committee, right? So the reach was broad, okay? Lots of people saw this misinformation.

Second, we know that they targeted swing states. Research out of Oxford University shows that they targeted swing states. So the reach, scope, and targeting seem sufficient to tip the election, if they could create behavior change. Then the question becomes: did they change vote choices? Did people who were going to vote for Hillary vote for Trump, or vice versa? And did they change voter turnout?

The evidence on vote choice is that changing someone's choice through persuasive messaging is incredibly difficult to do. It's highly unlikely that Russian manipulation changed people's vote choices, but the evidence on voter turnout is actually scarier. There is good evidence that persuasive messaging can change voter turnout substantially.

In fact, Facebook ran randomized experiments in 2010 and 2012 among sixty million people on Facebook that showed that a single message to go vote created about 800,000 more votes. And we know that George W. Bush defeated Al Gore by 537 votes in Florida.

The answer to your question is that we don't know definitively whether it tipped the election or not. I'm fairly confident that it didn't change vote choices substantially, and that the reach and targeting were sufficient to change the election if it had an effect. The way it's going to have an effect in 2020, and the way it would have had an effect in 2016, is through voter turnout: voter suppression on one side, and voter turnout on the other side.

Guy Kawasaki:

Let's say you're Joe Biden, how would you craft the message to encourage turnout?

Sinan Aral:

I think that one thing my colleagues in political science have convinced me of is that poll results, like thinking that your candidate is ahead, can suppress voter turnout. We also know that Russian manipulation is aimed at suppressing voter turnout. So I think the answer is crafting a message that simultaneously communicates how important this election is and how, given the electoral process, your vote counts much more than you might think it does.

When you think about America as a collection of 300 million people, a single vote doesn't seem to matter that much. But the election is typically decided in only a few states. We don't know in any given election year which states will end up being the swing states; those change from year to year, although there's a set that are typically swing states. And in a recent presidential election, as few as 537 votes made the difference. So emphasizing how important the election is, and then bringing it back down to how your single vote matters even though it might not seem to, is I think the right tack for that messaging.

Guy Kawasaki:

Okay. Speaking of Joe Biden and politics, could you explain how he could use the redirect method?

Sinan Aral:

Oh, yeah. A lot of times we think about these tools as just tools of manipulation by Russia or China or nefarious actors, like ISIS recruiters. But consider Jigsaw, run by Jared Cohen at Google, which is the unit designed to solve civic engagement problems and to fight terrorism, abuse, online racism, the KKK, et cetera. They devised the Redirect Method, where they would use digital marketing against the purveyors of hate on the internet, like the KKK or ISIS or others. They targeted ads at would-be ISIS recruits, for example, or would-be KKK recruits, and sent them to immaculately tailored content, created to be in context for a potential ISIS recruit, that would debunk some of the myths ISIS was putting out in its recruiting about what life was like in the caliphate.

They would show pictures of long food lines and pictures of ISIS fighters beating up old women, and tell narrative stories about what an awful place it is and how it's not what you think it is. Then they would target these ads, for instance, at people searching Google for hotels known to be on the path to the caliphate, where potential ISIS recruits would stay on their way there.

Guy Kawasaki:

Yeah?

Sinan Aral:

Yeah. They would be successful at turning around people's opinions before they decided to go down that road. It's a way of redirecting people that are moving towards hate and racism and violence away from hate and racism and violence.

Guy Kawasaki:

You could be redirected the other way too, right?

Sinan Aral:

Yes. It's a battle for hearts and minds. I mean, ISIS is going to use these tools and Google is going to use these tools to fight ISIS as they've demonstrated. It's a battle for the hearts and minds of the people that are listening. At the end of the day, they're going to listen to these two persuasive sets of messages and they're going to decide, "Should I go join ISIS or not?"

Guy Kawasaki:

What if, instead of reducing fake news, we increased fake news, so that there was so much fake news from various political perspectives that at some point people just didn't believe anything? So rather than believing just one side's fake news, people wouldn't trust news at all. Would that work?

Sinan Aral:

I don't think this is a society that we want to live in.

Guy Kawasaki:

Okay, well there's that.

Sinan Aral:

I don't think so. I see two major problems with that.

One is the obvious, which is we need common ground. So any student of negotiation knows that the first thing you need to bring two sides together is common ground. The thickness pulling us in exactly polar opposite directions is eliminating that common ground between us currently in our society in America, at least, and other places in the world where polarization is really taking hold.

The second is that credibility is so key. While novelty, shock, and awe are the short game in the attention economy, authenticity and reliability are the long game. I think the true leaders of the new social age will be the ones who realize that aligning the shareholder value of a company or a platform with society's values is long-term profit-maximizing, whereas getting cheap clicks through novelty, shock, awe, and falsity is short-term profit-maximizing.

The reason is that, as we've seen with the Stop Hate for Profit movement, regulatory backlash, the Delete Facebook movement, and The Social Dilemma movie, people will churn away from your platform, or, if you're an influencer, people will stop listening to you if they don't trust you, if they don't think you're authentic. That is true in the long term, even though you might get a great spike in the short term with the fake, salacious stuff.

Guy Kawasaki:

Okay. Thank you. I won't try that then. The last question is: what have I not asked you that I should have asked you?

Sinan Aral:

Yeah. I think we covered a good deal of it. One central tenet of the book is... well, I talk a lot about the promise and the peril, that it's not all doom and gloom. I'm a big fan of The Social Dilemma movie. I think everybody should go watch it. A lot of my friends are in it, and they cite my research in it. I think it's a great movie.

This book picks up where that movie leaves off, and it asks, "What do we do? What can we concretely do now that we've heard the clarion call that things are not well in the social media economy?"

I think that the thing that I talk about in the book is that it's full of peril, but it's also full of a ton of promise. What we've got to focus on is how do we achieve the promise and avoid the peril. I think that the second to last chapter in the book indicates one thing that makes that very difficult, which is the very source of the promise is also the source of the peril.

In other words, when we turn up speech, we turn up harmful speech, and we also turn up truth. When we turn up economic opportunity, we also, at times, turn up inequality. When we turn up security, sometimes we put our transparency at risk, and when we open up transparency, sometimes we put our security at risk. So there are a number of trade-offs that require us to be rigorous in order to achieve the promise and avoid the peril, and I think that's why we need a much deeper conversation than we're having right now about this topic.

Guy Kawasaki:

Before I conclude this episode, I want to read you a review by Steph Bran. "I love Guy's common sense and to-the-point interview approach. I always learn something intriguing as he interviews an impressive collection of innovative thinkers. As higher-ed is in dire need to reimagine its future, I am intrigued with Guy's interviews with Scott Galloway. For my higher-ed colleagues, read this and start rethinking your models." Thanks, Steph.

All of you, please go to Apple Podcasts and review this podcast. Is that too much to ask?

I hope you enjoyed this interview with Sinan Aral. I hope I got his name right… He brings up a lot of issues and offers some really tactical and practical solutions.

I want to thank Peg Fitzpatrick and Jeff Sieh for coming up with tactical and practical solutions for every challenge I've encountered creating this podcast.

This episode of Remarkable People was brought to you by reMarkable - the paper tablet company.
